Silicon Savanna: The workers taking on Africa's digital sweatshops

- By Erica Hellerstein

- Photography by Natalia Jidovanu

- Illustrations by Ann Kiernan

- feature

This story was updated at 6:30 ET on October 16, 2023

Wabe didn’t expect to see his friends’ faces in the shadows. But it happened after just a few weeks on the job.

He had recently signed on with Sama, a San Francisco-based tech company with a major hub in Kenya’s capital. The middle-man company was providing the bulk of Facebook’s content moderation services for Africa. Wabe, whose name we’ve changed to protect his safety, had previously taught science courses to university students in his native Ethiopia.

Why did we write this story?

The world’s biggest tech companies today have more power and money than many governments. This story offers a deep dive on court battles in Kenya that could jeopardize the outsourcing model upon which Meta has built its global empire.

Now, the 27-year-old was reviewing hundreds of Facebook photos and videos each day to decide if they violated the company’s rules on issues ranging from hate speech to child exploitation. He would get between 60 and 70 seconds to make a determination, sifting through hundreds of pieces of content over an eight-hour shift.

One day in January 2022, the system flagged a video for him to review. He opened up a Facebook livestream of a macabre scene from the civil war in his home country. What he saw next was dozens of Ethiopians being “slaughtered like sheep,” he said.

Then Wabe took a closer look at their faces and gasped. “They were people I grew up with,” he said quietly. People he knew from home. “My friends.”

Wabe leapt from his chair and stared at the screen in disbelief. He felt the room close in around him. Panic rising, he asked his supervisor for a five-minute break. “You don’t get five minutes,” she snapped. He turned off his computer, walked off the floor, and beelined to a quiet area outside of the building, where he spent 20 minutes crying by himself.

Wabe had been building a life for himself in Kenya while back home, a civil war was raging, claiming the lives of an estimated 600,000 people from 2020 to 2022. Now he was seeing it play out live on the screen before him.

That video was only the beginning. Over the next year, the job brought him into contact with videos he still can’t shake: recordings of people being beheaded, burned alive, eaten.

“The word evil is not equal to what we saw,” he said.

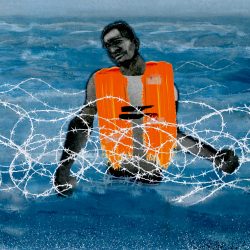

Yet he had to stay in the job. Pay was low — less than two dollars an hour, Wabe told me — but going back to Ethiopia, where he had been tortured and imprisoned, was out of the question. Wabe worked with dozens of other migrants and refugees from other parts of Africa who faced similar circumstances. Money was too tight — and life too uncertain — to speak out or turn down the work. So he and his colleagues kept their heads down and steeled themselves each day for the deluge of terrifying images.

Over time, Wabe began to see moderators as “soldiers in disguise” — a low-paid workforce toiling in the shadows to make Facebook usable for billions of people around the world. But he also noted a grim irony in the role he and his colleagues played for the platform’s users: “Everybody is safe because of us,” he said. “But we are not.”

Wabe said dozens of his former colleagues in Sama’s Nairobi offices now suffer from post-traumatic stress disorder. Wabe has also struggled with thoughts of suicide. “Every time I go somewhere high, I think: What would happen if I jump?” he wondered aloud. “We have been ruined. We were the ones protecting the whole continent of Africa. That’s why we were treated like slaves.”

To most people using the internet — most of the world — this kind of work is literally invisible. Yet it is a foundational component of the Big Tech business model. If social media sites were flooded with videos of murder and sexual assault, most people would steer clear of them — and so would the advertisers that bring the companies billions in revenue.

Around the world, an estimated 100,000 people work for companies like Sama, third-party contractors that supply content moderation services for the likes of Facebook’s parent company Meta, Google and TikTok. But while it happens at a desk, mostly on a screen, the demands and conditions of this work are brutal. Current and former moderators I met in Nairobi in July told me this work has left them with post-traumatic stress disorder, depression, insomnia and thoughts of suicide.

These “soldiers in disguise” are reaching a breaking point. Because of people like Wabe, Kenya has become ground zero in a battle over the future of content moderation in Africa and beyond. On one side are some of the most powerful and profitable tech companies on earth. On the other are young African content moderators who are stepping out from behind their screens and demanding that Big Tech companies reckon with the human toll of their enterprise.

In May, more than 150 moderators in Kenya, who keep the worst of the worst off of platforms like Facebook, TikTok and ChatGPT, announced their drive to create a trade union for content moderators across Africa. The union would be the first of its kind on the continent and potentially in the world.

There are also major pending lawsuits before Kenya’s courts targeting Meta and Sama. More than 180 content moderators — including Wabe — are suing Meta for $1.6 billion over poor working conditions, low pay and what they allege was unfair dismissal after Sama ended its content moderation agreement with Meta and Majorel picked up the contract instead. The plaintiffs say they were blacklisted from reapplying for their jobs after Majorel stepped in. In August, a judge ordered both parties to settle the case out of court, but the mediation broke down on October 16 after the plaintiffs' attorneys accused Meta of scuttling the negotiations and ignoring moderators' requests for mental health services and compensation. The lawsuit will now proceed to Kenya's employment and labor relations court, with an upcoming hearing scheduled for October 31.

The cases against Meta are unprecedented. According to Amnesty International, it is the “first time that Meta Platforms Inc will be significantly subjected to a court of law in the global south.” Forthcoming court rulings could jeopardize Meta’s status in Kenya and the content moderation outsourcing model upon which it has built its global empire.

Meta did not respond to requests for comment about moderators’ working conditions and pay in Kenya. In an emailed statement, a spokesperson for Sama said the company cannot comment on ongoing litigation but is “pleased to be in mediation” and believes “it is in the best interest of all parties to come to an amicable resolution.”

Odanga Madung, a Kenya-based journalist and a fellow at the Mozilla Foundation, believes the flurry of litigation and organizing marks a turning point in the country’s tech labor trajectory.

“This is the tech industry’s sweatshop moment,” Madung said. “Every big corporate industry here — oil and gas, the fashion industry, the cosmetics industry — have at one point come under very sharp scrutiny for the reputation of extractive, very colonial type practices.”

Nairobi may soon witness a major shift in the labor economics of content moderation. But it also offers a case study of this industry’s powerful rise. The vast capital city — sometimes called “Silicon Savanna” — has become a hub for outsourced content moderation jobs, drawing workers from across the continent to review material in their native languages. An educated, predominantly English-speaking workforce makes it easy for employers from overseas to set up satellite offices in Kenya. And the country’s troubled economy has left workers desperate for jobs, even when wages are low.

Sameer Business Park, a massive office compound in Nairobi’s industrial zone, is home to Nissan, the Bank of Africa, and Sama’s local headquarters. But just a few miles away lies one of Nairobi’s largest informal settlements, a sprawl of homes made out of scraps of wood and corrugated tin. The slum’s origins date back to the colonial era, when the land it sits on was a farm owned by white settlers. In the 1960s, after independence, the surrounding area became an industrial district, attracting migrants and factory workers who set up makeshift housing on the area adjacent to Sameer Business Park.

For companies like Sama, the conditions here were ripe for investment by 2015, when the firm established a business presence in Nairobi. Headquartered in San Francisco, the self-described “ethical AI” company aims to “provide individuals from marginalized communities with training and connections to dignified digital work.” In Nairobi, it has drawn its labor from residents of the city’s informal settlements, including 500 workers from Kibera, one of the largest slums in Africa. In an email, a Sama spokesperson confirmed moderators in Kenya made between $1.46 and $3.74 per hour after taxes.

Grace Mutung’u, a Nairobi-based digital rights researcher at Open Society Foundations, put this into local context for me. On the surface, working for a place like Sama seemed like a huge step up for young people from the slums, many of whom had family roots in factory work. It was less physically demanding and more lucrative. Compared to manual labor, content moderation “looked very dignified,” Mutung’u said. She recalled speaking with newly hired moderators at an informal settlement near the company’s headquarters. Unlike their parents, many of them were high school graduates, thanks to a government initiative in the mid-2000s to get more kids in school.

“These kids were just telling me how being hired by Sama was the dream come true,” Mutung’u told me. “We are getting proper jobs, our education matters.” These younger workers, Mutung’u continued, “thought: ‘We made it in life.’” They thought they had left behind the poverty and grinding jobs that wore down their parents’ bodies. Until, she added, “the mental health issues started eating them up.”

Today, 97% of Sama’s workforce is based in Africa, according to a company spokesperson. And despite its stated commitment to providing “dignified” jobs, it has caught criticism for keeping wages low. In 2018, the company’s late founder argued against raising wages for impoverished workers from the slum, reasoning that it would “distort local labor markets” and have “a potentially negative impact on the cost of housing, the cost of food in the communities in which our workers thrive.”

Content moderation did not become an industry unto itself by accident. In the early days of social media, when “don’t be evil” was still Google’s main guiding principle and Facebook was still cheekily aspiring to connect the world, this work was performed by employees in-house for the Big Tech platforms. But as companies aspired to grander scales, seeking users in hundreds of markets across the globe, it became clear that their internal systems couldn’t stem the tide of violent, hateful and pornographic content flooding people’s newsfeeds. So they took a page from multinational corporations’ globalization playbook: They decided to outsource the labor.

More than a decade on, content moderation is now an industry that is projected to reach $40 billion by 2032. Sarah T. Roberts, a professor of information studies at the University of California at Los Angeles, wrote the definitive study on the moderation industry in her 2019 book “Behind the Screen.” Roberts estimates that hundreds of companies are farming out these services worldwide, employing upwards of 100,000 moderators. In its own transparency documents, Meta says that more than 15,000 people moderate its content in more than 20 sites around the world. Some (it doesn’t say how many) are full-time employees of the social media giant, while others (it doesn’t say how many) work for the company’s contracting partners.

Kauna Malgwi was once a moderator with Sama in Nairobi. She was tasked with reviewing content on Facebook in her native language, Hausa. She recalled watching coworkers scream, faint and develop panic attacks on the office floor as images flashed across their screens. Originally from Nigeria, Malgwi took a job with Sama in 2019, after coming to Nairobi to study psychology. She told me she also signed a nondisclosure agreement instructing her that she would face legal consequences if she told anyone she was reviewing content on Facebook. Malgwi was confused by the agreement, but moved forward anyway. She was in graduate school and needed the money.

Sleep became a struggle.

Her appetite vanished.

She sent me a picture of herself at that weight and she looks like a different person, so tiny she could snap in half.

She began to have panic attacks that she attributes to the “psychological pain” of her job.

A 28-year-old moderator named Johanna described a similar decline in her mental health after watching TikTok videos of rape, child sexual abuse, and even a woman ending her life in front of her own children. Johanna currently works with the outsourcing firm Majorel, reviewing content on TikTok, and asked that we identify her using a pseudonym, for fear of retaliation by her employer. She told me she’s extroverted by nature, but after a few months at Majorel, she became withdrawn and stopped hanging out with her friends. Now, she dissociates to get through the day at work. “You become a different person,” she told me. “I’m numb.”

This is not the experience that the Luxembourg-based multinational — which employs more than 22,000 people across the African continent — touts in its recruitment materials. On a page about its content moderation services, Majorel’s website features a photo of a woman donning a pair of headphones and laughing. It highlights the company’s “Feel Good” program, which focuses on “team member wellbeing and resiliency support.”

According to the company, these resources include 24/7 psychological support for employees “together with a comprehensive suite of health and well-being initiatives that receive high praise from our people," Karsten König, an executive vice president at Majorel, said in an emailed statement. "We know that providing a safe and supportive working environment for our content moderators is the key to delivering excellent services for our clients and their customers. And that’s what we strive to do every day.”

But Majorel’s mental health resources haven’t helped ease Johanna’s depression and anxiety. She says the company provides moderators in her Nairobi office with on-site therapists who see employees in individual and group “wellness” sessions. But Johanna told me she stopped attending the individual sessions after her manager approached her about a topic she had shared in confidence with her therapist. “They told me it was a safe space,” Johanna explained, “but I feel that they breached that part of the confidentiality so I do not do individual therapy.” TikTok did not respond to a request for comment before publication.

Instead, she looked for other ways to make herself feel better. Nature has been especially healing. Whenever she can, Johanna takes herself to Karura Forest, a lush oasis in the heart of Nairobi. One afternoon, she brought me to one of her favorite spots there, a crashing waterfall beneath a canopy of trees. This is where she tries to forget about the images that keep her up at night.

Johanna remains haunted by a video she reviewed out of Tanzania, where she saw a lesbian couple attacked by a mob, stripped naked and beaten. She thought of them again and again for months. “I wondered: ‘How are they? Are they dead right now?’” At night, she would lie awake in her bed, replaying the scene in her mind.

“I couldn’t sleep, thinking about those women.”

Johanna’s experience lays bare another stark reality of this work. She was powerless to help victims. Yes, she could remove the video in question, but she couldn’t do anything to bring the women who were brutalized to safety. This is a common scenario for content moderators like Johanna, who are not only seeing these horrors in real-time, but are asked to simply remove them from the internet and, by extension, perhaps, from public record. Did the victims get help? Were the perpetrators brought to justice? With the endless flood of videos and images waiting for review, questions like these almost always go unanswered.

The situation that Johanna encountered highlights what David Kaye, a professor of law at the University of California at Irvine and the former United Nations special rapporteur on freedom of expression, believes is one of the platforms’ major blindspots: “They enter into spaces and countries where they have very little connection to the culture, the context and the policing,” without considering the myriad ways their products could be used to hurt people. When platforms introduce new features like livestreaming or new tools to amplify content, Kaye continued, “are they thinking through how to do that in a way that doesn’t cause harm?”

The question is a good one. For years, Meta CEO Mark Zuckerberg famously urged his employees to “move fast and break things,” an approach that doesn’t leave much room for the kind of contextual nuance that Kaye advocates. And history has shown the real-world consequences of social media companies’ failures to think through how their platforms might be used to foment violence in countries in conflict.

The most searing example came from Myanmar in 2017, when Meta famously looked the other way as military leaders used Facebook to incite hatred and violence against Rohingya Muslims as they ran “clearance operations” that left an estimated 24,000 Rohingya people dead and caused more than a million to flee the country. A U.N. fact-finding mission later wrote that Facebook had a “determining role” in the genocide. After commissioning an independent assessment of Facebook’s impact in Myanmar, Meta itself acknowledged that the company didn’t do “enough to help prevent our platform from being used to foment division and incite offline violence. We agree that we can and should do more.”

Yet five years later, another case now before Kenya’s high court deals with the same issue on a different continent. Last year, Meta was sued by a group of petitioners including the family of Meareg Amare Abrha, an Ethiopian chemistry professor who was assassinated in 2021 after people used Facebook to orchestrate his killing. Amare’s son tried desperately to get the company to take down the posts calling for his father’s head, to no avail. He is now part of the suit that accuses Meta of amplifying hateful and malicious content during the conflict in Tigray, including the posts that called for Amare’s killing.

The case underlines the strange distance between Big Tech behemoths and the content moderation industry that they’ve created offshore, where the stakes of moderation decisions can be life or death. Paul Barrett, the deputy director of the Center for Business and Human Rights at New York University's Stern School of Business who authored a seminal 2020 report on the issue, believes this distance helped corporate leadership preserve their image of a shiny, frictionless world of tech. Social media was meant to be about abundant free speech, connecting with friends and posting pictures from happy hour — not street riots or civil war or child abuse.

“This is a very nitty gritty thing, sifting through content and making decisions,” Barrett told me. “They don't really want to touch it or be in proximity to it. So holding this whole thing at arm’s length as a psychological or corporate culture matter is also part of this picture.”

Sarah T. Roberts likened content moderation to “a dirty little secret. It’s been something that people in positions of power within the companies wish could just go away,” Roberts said. This reluctance to deal with the messy realities of human behavior online is evident today, even in statements from leading figures in the industry. For example, with the July launch of Threads, Meta’s new Twitter-like social platform, Instagram head Adam Mosseri expressed a desire to keep “politics and hard news” off the platform.

The decision to outsource content moderation meant that this part of what happened on social media platforms would “be treated at arm’s length and without that type of oversight and scrutiny that it needs,” Barrett said. But the decision had collateral damage. In pursuit of mass scale, Meta and its counterparts created a system that produces an impossible amount of material to oversee. By some estimates, three million items of content are reported on Facebook alone on a daily basis. And despite what some of Silicon Valley’s other biggest names tell us, artificial intelligence systems are insufficient moderators. So it falls on real people to do the work.

One morning in late July, James Oyange, a former tech worker, took me on a driving tour of Nairobi’s content moderation hubs. Oyange, who goes by Mojez, is lanky and gregarious, quick to offer a high five and a custom-made quip. We pulled up outside a high-rise building in Westlands, a bustling central neighborhood near Nairobi’s business district. Mojez pointed up to the sixth floor: Majorel’s local office, where he worked for nine months, until he was let go.

He spent much of his year in this building. Pay was bad and hours were long, and it wasn’t the customer service job he’d expected when he first signed on — this is something he brought up with managers early on. But the 26-year-old grew to feel a sense of duty about the work. He saw the job as the online version of a first responder — an essential worker in the social media era, cleaning up hazardous waste on the internet. But being the first to the scene of the digital wreckage changed Mojez, too — the way he looks, the way he sleeps, and even his life’s direction.

That morning, as we sipped coffee in a trendy, high-ceilinged cafe in Westlands, I asked how he’s holding it together. “Compared to some of the other moderators I talked to, you seem like you’re doing okay,” I remarked. “Are you?”

“Before I started the job, it was easy for me to get sleep,” he explained.

He developed a recurrent nightmare that he was one of the 12.

“It came for me in my dreams.”

His days often started bleary-eyed. When insomnia got the best of him, he would force himself to go running under the pitch-black sky, circling his neighborhood for 30 minutes and then stretching in his room as the darkness lifted. At dawn, he would ride the bus to work, snaking through Nairobi’s famously congested roads until he arrived at Majorel’s offices. A food market down the street offered some moments of relief from the daily grind. Mojez would steal away there for a snack or lunch. His vendor of choice doled out tortillas stuffed with sausage. He was often so exhausted by the end of the day that he nodded off on the bus ride home.

And then, in April 2023, Majorel told him that his contract wouldn’t be renewed.

It was a blow. Mojez walked into the meeting fantasizing about a promotion. He left without a job. He believes he was blacklisted by company management for speaking up about moderators’ low pay and working conditions.

A few weeks later, an old colleague put him in touch with Foxglove, a U.K.-based legal nonprofit supporting the lawsuit currently in mediation against Meta. The organization also helped organize the May meeting in which more than 150 African content moderators across platforms voted to unionize.

At the event, Mojez was stunned by the universality of the challenges facing moderators working elsewhere. He realized: “This is not a Mojez issue. These are 150 people across all social media companies. This is a major issue that is affecting a lot of people.” After that, despite being unemployed, he was all in on the union drive. Mojez, who studied international relations in college, hopes to do policy work on tech and data protection someday. But right now his goal is to see the effort through, all the way to the union’s registration with Kenya’s labor department.

Mojez’s friend in the Big Tech fight, Wabe, also went to the May meeting. Over lunch one afternoon in Nairobi in July, he described what it was like to open up about his experiences publicly for the first time. “I was happy,” he told me. “I realized I was not alone.” This awareness has made him more confident about fighting “to make sure that the content moderators in Africa are treated like humans, not trash,” he explained. He then pulled up a pant leg and pointed to a mark on his calf, a scar from when he was imprisoned and tortured in Ethiopia. The companies, he said, “think that you are weak. They don’t know who you are, what you went through.”

Looking at Kenya’s economic woes, you can see why these jobs were so alluring. My visit to Nairobi coincided with a string of July protests that paralyzed the city. The day I flew in, it was unclear if I would be able to make it from the airport to my hotel — roads, businesses and public transit were threatening to shut down in anticipation of the unrest. The demonstrations, which have been bubbling up every so often since last March, came in response to steep new tax hikes, but they were also about the broader state of Kenya’s faltering economy — soaring food and gas prices and a youth unemployment crisis, some of the same forces that drive throngs of young workers to work for outsourcing companies and keep them there.

Leah Kimathi, a co-founder of the Kenyan nonprofit Council for Responsible Social Media, believes Meta’s legal defense in the labor case brought by the moderators betrays Big Tech’s neo-colonial approach to business in Kenya. When the petitioners first filed suit, Meta tried to absolve itself by claiming that it could not be brought to trial in Kenya, since it has no physical offices there and did not directly employ the moderators, who were instead working for Sama, not Meta. But a Kenyan labor court saw it differently, ruling in June that Meta — not Sama — was the moderators’ primary employer and the case against the company could move forward.

“So you can come here, roll out your product in a very exploitative way, disregarding our laws, and we cannot hold you accountable,” Kimathi said of Meta’s legal argument. “Because guess what? I am above your laws. That was the exact colonial logic.”

Kimathi continued: “For us, sitting in the Global South, but also in Africa, we’re looking at this from a historical perspective. Energetic young Africans are being targeted for content moderation and they come out of it maimed for life. This is reminiscent of slavery. It’s just now we’ve moved from the farms to offices.”

As Kimathi sees it, the multinational tech firms and their outsourcing partners made one big, potentially fatal miscalculation when they set up shop in Kenya: They didn’t anticipate a workers’ revolt. If they had considered the country’s history, perhaps they would have seen the writing of the African Content Moderator’s Union on the wall.

Kenya has a rich history of worker organizing in resistance to the colonial state. The labor movement was “a critical pillar of the anti-colonial struggle,” Kimathi explained to me. She and other critics of Big Tech’s operations in Kenya see a line that leads from colonial-era labor exploitation and worker organizing to the present day. A workers’ backlash was a critical part of that resistance — and one the Big Tech platforms and their outsourcers may have overlooked when they decided to do business in the country.

“They thought that they would come in and establish this very exploitative industry and Kenyans wouldn’t push back,” she said. Instead, they sued.

What happens if the workers actually win?

Foxglove, the nonprofit supporting the moderators’ legal challenge against Meta, writes that the outcome of the case could disrupt the global content moderation outsourcing model. If the court finds that Meta is the “‘true employer’ of their content moderators in the eyes of the law,” Foxglove argues, “then they cannot hide behind middlemen like Sama or Majorel. It will be their responsibility, at last, to value and protect the workers who protect social media — and who have made tech executives their billions.”

But there is still a long road ahead, for the moderators themselves and for the kinds of changes to the global moderation industry that they are hoping to achieve.

In Kenya, the workers involved in the lawsuit and union face practical challenges. Some, like Mojez, are unemployed and running out of money. Others are migrant workers from elsewhere on the continent who may not be able to stay in Kenya for the duration of the lawsuit or union fight.

The Moderator’s Union is not yet registered with Kenya’s labor office, but if it becomes official, its members intend to push for better conditions for moderators working across platforms in Kenya, including higher salaries and more psychological support for the trauma endured on the job. And their ambitions extend far beyond Kenya. The network hopes to inspire similar actions in other countries’ content moderation hubs. According to Martha Dark, Foxglove’s co-founder and director, the industry’s working conditions have spawned a cross-border, cross-company organizing effort, drawing employees from Africa, Europe and the U.S.

“There are content moderators that are coming together from Poland, America, Kenya, and Germany talking about what the challenges are that they experience when trying to organize in the context of working for Big Tech companies like Facebook and TikTok,” she explained.

Still, there are big questions that might hinge on the litigation’s ability to transform the moderation industry. “It would be good if outsourced content reviewers earned better pay and were better treated,” NYU’s Paul Barrett told me. “But that doesn't get at the issue that the mother companies here, whether it’s Meta or anybody else, is not hiring these people, is not directly training these people and is not directly supervising these people.” Even if the Kenyan workers are victorious in their lawsuit against Meta, and the company is stung in court, “litigation is still litigation,” Barrett explained. “It’s not the restructuring of an industry.”

So what would truly fix the moderation industry’s core problem? For Barrett, the industry will only see meaningful change if companies can bring “more, if not all of this function in-house.”

But Sarah T. Roberts, who interviewed workers from Silicon Valley to the Philippines for her book on the global moderation industry, believes collective bargaining is the only pathway forward for changing the conditions of the work. She dedicated the end of her book to the promise of organized labor.

“The only hope is for workers to push back,” she told me. “At some point, people get pushed too far. And the ownership class always underestimates it. Why does Big Tech want everything to be computational in content moderation? Because AI tools don’t go on strike. They don't talk to reporters.”

Artificial intelligence is part of the content moderation industry, but it will probably never be capable of replacing human moderators altogether. What we do know is that AI models will continue to rely on human beings to train and oversee their data sets — a reality Sama’s CEO recently acknowledged. For now and the foreseeable future, there will still be people behind the screen, fueling the engines of the world’s biggest tech platforms. But because of people like Wabe and Mojez and Kauna, their work is becoming more visible to the rest of us.

While writing this piece, I kept returning to one scene from my trip to Nairobi that powerfully drove home the raw humanity at the base of this entire industry, powering the whole system, as much as the tech scions might like to pretend otherwise. I was in the food court of a mall, sitting with Malgwi and Wabe. They were both dressed sharply, like they were on break from the office: Malgwi in a trim pink dress and a blazer, Wabe in leather boots and a peacoat. But instead, they were just talking about how work ruined them.

At one point in the conversation, Wabe told me he was willing to show me a few examples of violent videos he snuck out while working for Sama and later shared with his attorney. If I wanted to understand “exactly what we see and moderate on the platform,” Wabe explained, the opportunity was right in front of me. All I had to do was say yes.

I hesitated. I was genuinely curious. A part of me wanted to know, wanted to see first-hand what he had to deal with for more than a year. But I’m sensitive, maybe a little breakable. A lifelong insomniac. Could I handle seeing this stuff? Would I ever sleep again?

It was a decision I didn’t have to make. Malgwi intervened. “Don’t send it to her,” she told Wabe. “It will traumatize her.”

So much of this story, I realized, came down to this minute-long exchange. I didn’t want to see the videos because I was afraid of how they might affect me. Malgwi made sure I didn’t have to. She already knew what was on the other side of the screen.

The story you just read is a small piece of a complex and an ever-changing storyline that Coda covers relentlessly and with singular focus. But we can’t do it without your help. Show your support for journalism that stays on the story by becoming a member today. Coda Story is a 501(c)3 U.S. non-profit. Your contribution to Coda Story is tax deductible.
